Category: Linguistics

-

If You’re A Linguist, Don’t Sweat The Formal Math (Too Much) in Natural Language Processing

In the realm of Natural Language Processing (NLP), a foundational understanding of linear algebra does help (somewhat) when trying to make money with NLP. Fundamentally, the concern for all of us philosophers, linguists and psychologists is to earn a decent living without being penalized in the workforce because our training doesn't pay off immediately. The overarching…

-

Hallucination – The Term for When Artificial Intelligence Models Get It Wrong

Although it is impressive that a chatbot responds to an input, academics, scientists and experts in applied artificial intelligence have not defined their position on AI in psychological terms. Cognitive science was indeed the inspiration for the so-called ‘neural networks’ that define the architecture of some of…

-

Italy Blocks OpenAI Chatbot for Violating Consumer Data Protection Law

Italy has taken a decisive step to protect its citizens from the potential misuse of artificial intelligence technology by blocking ChatGPT, the chatbot developed by the American technology company OpenAI. On Friday, the Italian Data Protection Authority (GPDP) suspended ChatGPT with immediate effect, citing a data breach on March 20 concerning its users and…

-

Exploring the Boundaries of AI: Apple Investigates Generative AI for Siri

Apple is pushing the boundaries of artificial intelligence (AI) with its latest research and development efforts. According to a report by the New York Times, the tech giant is currently exploring generative AI concepts that could eventually be used to make Siri more powerful and effective. Generative AI is a form of artificial intelligence that…

-

Web Scraping As A Sourcing Technique For NLP

In this post, we provide a series of web scraping examples and references for people looking to bootstrap text for a language model. The advantage is that a greater number of speech domains can be covered. Newer vocabulary, or possibly very common slang, is picked up through this method since most corporate language…
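As a rough illustration of the extraction step, here is a minimal sketch using only Python's standard-library `html.parser`. It assumes the text you want lives inside `<p>` elements; `ParagraphExtractor` and `extract_paragraphs` are hypothetical helpers, not code from the original post. Fetching pages (for example with `urllib.request`) and checking a site's robots.txt and terms of use are left out.

```python
from html.parser import HTMLParser


class ParagraphExtractor(HTMLParser):
    """Hypothetical helper: collects the text found inside <p> elements."""

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True by default, so &oacute; -> ó
        self._depth = 0  # > 0 while the parser is inside a <p> element
        self._buffer: list[str] = []
        self.paragraphs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self._depth += 1

    def handle_endtag(self, tag):
        if tag == "p" and self._depth > 0:
            self._depth -= 1
            if self._depth == 0:
                # Collapse runs of whitespace and keep only non-empty paragraphs.
                text = " ".join("".join(self._buffer).split())
                if text:
                    self.paragraphs.append(text)
                self._buffer = []

    def handle_data(self, data):
        # Text inside nested inline tags (<b>, <a>, ...) is still collected.
        if self._depth > 0:
            self._buffer.append(data)


def extract_paragraphs(html: str) -> list[str]:
    """Return the cleaned text of every <p> element in an HTML string."""
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()
    return parser.paragraphs
```

Restricting collection to `<p>` text is a cheap way to skip menus and footers; sites that put article text in other elements would need a different rule.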

-

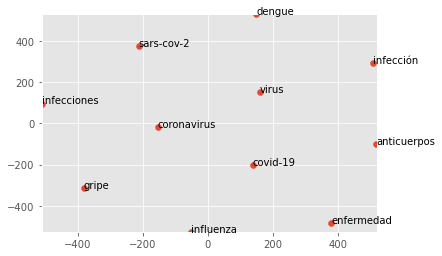

Word2Vec Mexican Spanish Model: Lyrics, News Documents

A Corpus That Contains Colloquial Lyrics & News Documents For Mexican Spanish. This experimental dataset was developed by four social science specialists and one industry expert (myself), using samples from Mexico-specific news texts and normalized song lyrics. The intent is to understand how small, phrase-level constituents will interact with larger, editorialized style…
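The excerpt doesn't describe how the model was trained, so the following is only a sketch of the general idea behind Word2Vec's skip-gram variant: each word is paired with the words around it inside a fixed window, and those (center, context) pairs become training examples. `skipgram_pairs` is a hypothetical helper; libraries such as gensim generate these pairs internally and commonly also sample a smaller effective window per word.

```python
def skipgram_pairs(tokens: list[str], window: int = 2) -> list[tuple[str, str]]:
    """Return (center, context) pairs for every token within `window` positions.

    Hypothetical helper illustrating skip-gram training examples with a
    fixed window (no window sampling, subsampling or negative sampling).
    """
    pairs = []
    for i, center in enumerate(tokens):
        # Clamp the window to the boundaries of the token list.
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs
```

A smaller `window` emphasizes immediate, phrase-level neighbors, while a larger one pulls in broader topical context.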

-

Tokenizing Text, Finding Word Frequencies Within Corpora

One way to think about tokenization is as finding the smallest possible unit of analysis for computational linguistics tasks. As such, tokenization is among the first steps (along with normalization) in the average NLP pipeline or computational linguistics analysis. This process helps break down text into a manner…
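A minimal sketch of tokenization plus frequency counting in Python, assuming a simple regular-expression tokenizer (`\w+`) with lowercasing as the only normalization step. This is a stand-in for illustration; dedicated tokenizers such as spaCy's handle punctuation, contractions and clitics more carefully. The names `tokenize` and `word_frequencies` are hypothetical.

```python
import re
from collections import Counter

# For str patterns in Python 3, \w matches Unicode letters (so "ñ" and "á"
# stay inside words), plus digits and the underscore.
TOKEN_RE = re.compile(r"\w+")


def tokenize(text: str) -> list[str]:
    """Lowercase the text (simple normalization) and split it into word tokens."""
    return TOKEN_RE.findall(text.lower())


def word_frequencies(docs: list[str]) -> Counter:
    """Count token frequencies across every document in a corpus."""
    counts = Counter()
    for doc in docs:
        counts.update(tokenize(doc))
    return counts
```

`word_frequencies(corpus).most_common(20)` then lists the most frequent tokens, which is usually the first sanity check on a new corpus.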

-

Frequency Counts For Named Entities Using Spacy/Python Over MX Spanish News Text

In this post, we review some straightforward Python code that lets a user process text and retrieve named entities alongside their counts. The main dependencies are Spacy, with a small, compact version of its Spanish language model built for Named Entity Recognition, and the plotting library Matplotlib, if you’re looking…
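The counting half of this task can be sketched independently of the model. The hypothetical function `entity_counts` below takes (text, label) pairs and tallies them with `collections.Counter`, optionally keeping only one label. With spaCy, the pairs could come from `((ent.text, ent.label_) for ent in doc.ents)` after loading a pipeline with `spacy.load("es_core_news_sm")`; that model name is an assumption about which small Spanish model the post uses. Its entity labels are `PER`, `LOC`, `ORG` and `MISC`.

```python
from collections import Counter
from typing import Iterable, Optional


def entity_counts(
    ents: Iterable[tuple[str, str]], label: Optional[str] = None
) -> Counter:
    """Count entity mentions from (text, label) pairs (hypothetical helper).

    If `label` is given (e.g. "PER" or "LOC"), only entities with that label
    are counted. Surrounding whitespace is stripped so that "Pemex " and
    "Pemex" are treated as the same mention.
    """
    counts = Counter()
    for text, ent_label in ents:
        if label is None or ent_label == label:
            counts[text.strip()] += 1
    return counts
```

`Counter.most_common(n)` then returns the top entities as (text, count) tuples, a shape that is easy to pass to a Matplotlib bar chart.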

-

N-Gram Analysis Over Sensitive Topics Corpus

I was recently able to do some analysis over the SugarBear AI violence corpus, a collection of documents classified by analysts at the SugarBear AI group. Over the past year, the group has been manually classifying thousands of Mexican Spanish news documents that deal with today’s new topics: “Coronavirus”, “WFH”,…
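The corpus itself isn't reproduced here, so this is only a sketch of how n-gram frequencies are typically computed, assuming each document has already been tokenized into a list of strings. `ngrams` and `ngram_counts` are hypothetical helper names.

```python
from collections import Counter


def ngrams(tokens: list[str], n: int) -> list[tuple[str, ...]]:
    """Return the contiguous n-grams of a token list.

    Zipping n staggered slices (tokens[0:], tokens[1:], ...) yields each
    window of n consecutive tokens; the result is empty if n > len(tokens).
    """
    return list(zip(*(tokens[i:] for i in range(n))))


def ngram_counts(docs: list[list[str]], n: int = 2) -> Counter:
    """Count n-grams across a corpus of pre-tokenized documents."""
    counts = Counter()
    for tokens in docs:
        counts.update(ngrams(tokens, n))
    return counts
```

Bigrams and trigrams such as ("trabajo", "desde", "casa") keep multiword topics together that single-word counts would split apart.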

-

Using Spacy in Python To Extract Named Entities in Spanish

The Spacy small language model has some difficulty with contemporary news texts that are neither Eurocentric nor US-based. This lack of accuracy with contemporary figures likely owes in part to a less thorough scrape of Wikipedia and to relative changes that have taken place in Mexico, Bolivia and other countries with highly variant dialects…

