Introduction

In this post, we provide a series of web scraping examples and a reference for people looking to bootstrap text for a language model. The advantage is that a greater number of spoken-language domains can be covered. Newer vocabulary, and possibly very common slang, is picked up through this method, since most corporate language managers do not often interact with this type of speech.

Most people would not necessarily consider Spanish under-resourced. However, consider the word error rate of products like the speech recognition feature in a Hyundai or Mercedes-Benz, or text classification on social media platforms, which is skewed towards English-centric content. There certainly seems to be a performance gap between contemporary Spanish speech in the US and the products developed for that demographic of speakers.

Lyrics are a great reference point for spoken speech. This contrasts greatly with long-form news articles, which are almost academic in tone. Read speech also carries a certain intonation, which does not reflect the short, abbreviated or elliptical patterns common to spoken speech. As such, knowing how to parse the letras.com pages may be a good idea for those refining and expanding language models with “real world speech”.

Overview:

- Point to Letras.com

- Retrieve Artist

- Retrieve Artist Songs

- Generate individual texts for songs until complete.

- Repeat until all artists in artists file are retrieved.
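The steps above amount to two nested loops. The sketch below shows only that control flow; the names `scrape_all`, `list_songs`, `fetch_lyrics` and `save` are placeholders invented here for illustration, not functions from the script, and the callables are passed in so the loop can be tried without touching the network.

```python
from typing import Callable, Iterable


def scrape_all(
    artists: Iterable[str],
    list_songs: Callable[[str], Iterable[str]],
    fetch_lyrics: Callable[[str], str],
    save: Callable[[str, str], None],
) -> int:
    """Walk every artist, then every song, saving one text per song.

    All four parameters are hypothetical stand-ins for the real steps.
    Returns the number of songs saved.
    """
    saved = 0
    for artist in artists:                    # repeat for every artist in the file
        for song_url in list_songs(artist):   # retrieve that artist's songs
            save(song_url, fetch_lyrics(song_url))  # one text per song
            saved += 1
    return saved


# Stand-in data so the sketch runs offline.
catalog = {"artist-a": ["artist-a/1", "artist-a/2"], "artist-b": ["artist-b/1"]}
store = {}
count = scrape_all(
    catalog,
    list_songs=lambda artist: catalog[artist],
    fetch_lyrics=lambda url: "lyrics for " + url,
    save=store.__setitem__,
)
print(count, sorted(store))
```

Keeping the loop separate from the fetching and saving steps makes it easier to swap in a different site or storage format later.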

The above steps are very abbreviated, and even the description below is perhaps too short. If you’re a beginner, feel free to reach out to lezama@lacartita.com. I’d rather deal with beginners more directly; experienced Python programmers should have no issue with the present documentation or with modifying the basic script and idea to their liking.

Sourcing

In NLP, the number one issue will never be a lack of innovative techniques, community or documentation for commonly used libraries. The number one issue is, and will continue to be, the proper sourcing and development of training data.

Many practitioners have found that accurate, use-case-specific data is better than a generalized solution, like BERT or other large language models. The lack of such data is most evident in languages, like Spanish, that do not have as high a presence in the resources used to train those models, like Wikipedia and Reddit.

Song Lyrics As Useful Test Case

At a high level, we created a list of relevant artists, then looped through the list to check whether letras.com had any songs for each of them. Once a request yielded a result, we looped through the individual songs for each artist.

Requests, BS4

The proper acquisition of data can be accomplished with BeautifulSoup. The library has been around for over 10 years, and it offers an easy way to process HTML or XML parse trees in Python; you can think of BS as a way to acquire the useful content of an HTML page: everything bounded by tags. The requests library is also important, as it is the way to reach out to a webpage and extract the entirety of the HTML page.
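To make "everything bounded by tags" concrete, here is a small offline illustration. The HTML string is invented for this example and stands in for whatever requests would download; only standard BeautifulSoup calls are used.

```python
from bs4 import BeautifulSoup

# Hypothetical page source, standing in for what requests would download.
SAMPLE_HTML = """
<html>
  <head><title>Pagina de ejemplo</title></head>
  <body>
    <h1>Artista</h1>
    <p>Primera estrofa</p>
    <p>Segunda estrofa</p>
  </body>
</html>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# Each lookup returns a tag; get_text() keeps only the text it bounds.
page_title = soup.title.get_text()
heading = soup.find("h1").get_text()
paragraphs = [p.get_text() for p in soup.find_all("p")]

print(page_title, heading, paragraphs)
```

The same `find` and `find_all` calls work on a real page once its HTML has been fetched.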

    # -*- coding: utf-8 -*-
    """
    Created on Sat Oct 16 22:36:11 2021

    @author: RicardoLezama.com
    """
    import requests
    from bs4 import BeautifulSoup

    artist = requests.get("https://www.letras.com").text

The line `requests.get("https://www.letras.com").text` does what the attribute `text` implies: the call obtains the content of the HTML page and makes it available within the Python program. Adding a function definition helps group this useful content together.
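A request can fail or return an error page, so it may be worth checking the response before reading `.text`. The following is a minimal sketch, not part of the original script: the name `fetch_html` and its `get` parameter are assumptions made here, and `get` exists only so the function can be exercised without a network connection.

```python
import requests


def fetch_html(url, get=requests.get, timeout=10):
    """Return the HTML of `url` as text, or None if the request fails.

    `fetch_html` and its `get` parameter are illustrative; `get` defaults
    to requests.get and can be replaced by a stand-in for offline use.
    """
    try:
        response = get(url, timeout=timeout)
        response.raise_for_status()   # raises requests.HTTPError on 4xx/5xx
    except requests.RequestException:
        # Covers connection errors, timeouts and HTTP error statuses.
        return None
    return response.text
```

A caller can then skip an artist or song when the result is None instead of parsing an error page.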

Functions For WebScraping

Creating a bs4 object is easy enough. Pass the link as the first argument, then parse each lyric page by its div tags. In this case, web_link is the argument to pass along to the function, for example a letras.com URL. The function lyrics_url returns all the div tags with a particular class value. On a song page, those tags contain the text of the lyrics.

    def lyrics_url(web_link):
        """
        This helps create a BS4 object.

        Args: web_link, the URL of the page to parse.

        return: list of div tags holding the lyrics content.
        """
        artist = requests.get(web_link).text
        check_soup = BeautifulSoup(artist, 'html.parser')
        return check_soup.find_all('div', class_='cnt-letra p402_premium')

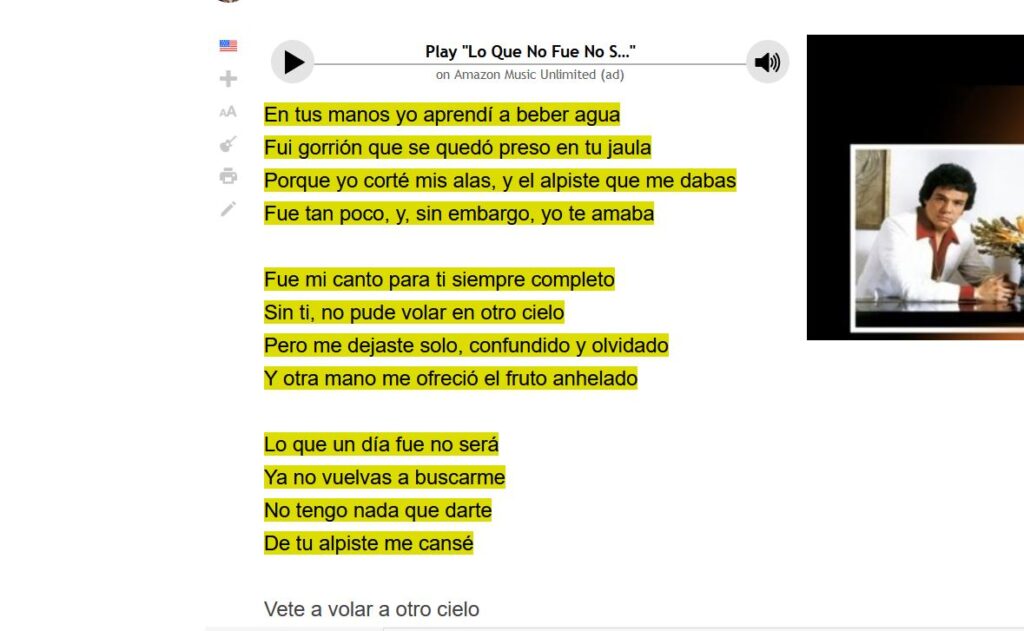

The image above shows the content returned for one possible argument to lyrics_url, “https://www.letras.com/jose-jose/135222/”. See the GitHub repository for more details.
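The tags returned by lyrics_url still carry their markup, and the script later writes `str(song_lyrics[0])` to disk, tags included. For language model text, plain lines are usually more useful. Below is one possible way to get them; the function name `lyrics_as_lines` and the sample markup are invented for illustration, and only the class name comes from the script above.

```python
from bs4 import BeautifulSoup

# Invented markup; only the class name is taken from the script above.
SAMPLE_SONG_PAGE = """
<div class="cnt-letra p402_premium">
  <p>Primera linea<br/>Segunda linea</p>
  <p>Tercera linea</p>
</div>
"""


def lyrics_as_lines(html):
    """Return the lyric lines of a song page as a list of plain strings."""
    soup = BeautifulSoup(html, "html.parser")
    lines = []
    for div in soup.find_all("div", class_="cnt-letra p402_premium"):
        # stripped_strings yields each text fragment without its tags
        # and without surrounding whitespace.
        lines.extend(div.stripped_strings)
    return lines


print(lyrics_as_lines(SAMPLE_SONG_PAGE))
```

Writing `"\n".join(lines)` to the output file instead of the raw tag would keep one lyric line per text line.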

Organizing Content

Drilling down to a specific artist requires basic knowledge of how Letras.com is set up to organize songs on an artist's home page. The function artist_songs_url parses the entirety of a given artist's song list so that we can drill down further into each specific title.
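As an offline illustration of that structure, the sketch below pulls song links out of a made-up artist page. The class name `cnt-list-row -song` and the `data-shareurl` attribute are the ones the script relies on; the sample HTML, the URLs in it and the function name `song_urls` are assumptions made for this example.

```python
from bs4 import BeautifulSoup

# Made-up artist page; the real markup on the site may differ.
SAMPLE_ARTIST_PAGE = """
<ul>
  <li class="cnt-list-row -song" data-shareurl="https://www.letras.com/example-artist/1/">Uno</li>
  <li class="cnt-list-row -song" data-shareurl="https://www.letras.com/example-artist/2/">Dos</li>
  <li class="cnt-list-row">Not a song row</li>
</ul>
"""


def song_urls(html):
    """Return the share URL of every song row found in an artist page."""
    soup = BeautifulSoup(html, "html.parser")
    rows = soup.find_all("li", class_="cnt-list-row -song")
    # .get returns None instead of raising if a row lacks the attribute.
    return [row.get("data-shareurl") for row in rows if row.get("data-shareurl")]


print(song_urls(SAMPLE_ARTIST_PAGE))
```

Using `.get` rather than indexing means a row without the attribute is skipped instead of stopping the whole run.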

In the main function, we call these functions to loop through each artist's page and songs, generating a uniquely named file for each song and its lyrics. The function generate_text writes one set of lyrics into each individual file. Later, for Gensim, we can turn the lyrics files into a single coherent list.

    def artist_songs_url(web_link):
        """
        This helps land on the URLs of the songs for an artist.

        Args: web_link is the URL of the artist page.

        Return songs from https://www.letras.com/gru-;/
        """
        # Request the page once and reuse the response for text and status.
        response = requests.get(web_link)
        print("Status Code", response.status_code)
        check_soup = BeautifulSoup(response.text, 'html.parser')
        # Each song on the artist page is an <li> with this class.
        songs = check_soup.find_all('li', class_='cnt-list-row -song')
        return songs

    # The lyrics on a song page sit inside: div class="cnt-letra p402_premium"

    import uuid

    def generate_text(url):
        """Write the lyrics of every song on an artist page to its own file."""
        songs = artist_songs_url(url)
        for a in songs:
            print(a['data-shareurl'])
            song_lyrics = lyrics_url(a['data-shareurl'])
            if not song_lyrics:
                # No lyrics div was found on this page; skip it.
                continue
            # uuid1 gives each song a unique file name.
            with open(str(uuid.uuid1()) + 'results.txt', 'w', encoding='utf-8') as new_file:
                new_file.write(str(song_lyrics[0]))
            print(song_lyrics)
        print('we have completed the download for ', url)

    def main():
        # The 'artistas' file holds one artist name per line, as it appears in the URL.
        with open('artistas', 'r', encoding='utf-8') as artist_file:
            artistas = artist_file.read().splitlines()
        url = 'https://www.letras.com/'
        for a in artistas:
            generate_text(url + a + "/")
        print('done')

    # Once complete, run copy *results output.txt to consolidate lyrics into a single page.

    if __name__ == '__main__':
        main()
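Once the per-song files exist, the Gensim step mentioned earlier needs them as lists of tokens, since models such as Word2Vec take an iterable of token lists. The sketch below is one rough way to build that structure with the standard library only. It assumes the files end in results.txt, as in generate_text, and that they hold plain text with the markup already removed; the name `load_corpus` and the simple regex tokenizer are choices made here, not part of the original script.

```python
import re
from pathlib import Path

# \w+ matches runs of letters (accented ones included), digits and underscores;
# punctuation and whitespace are dropped.
TOKEN_PATTERN = re.compile(r"\w+")


def load_corpus(folder, pattern="*results.txt"):
    """Return one list of lowercase tokens per lyrics file in `folder`."""
    corpus = []
    for path in sorted(Path(folder).glob(pattern)):
        text = path.read_text(encoding="utf-8")
        tokens = TOKEN_PATTERN.findall(text.lower())
        if tokens:                      # skip empty files
            corpus.append(tokens)
    return corpus
```

Calling `load_corpus(".")` in the folder where the script ran would return the nested list, one inner list per song, ready to hand to a Gensim model.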