Category: Tokenization

-

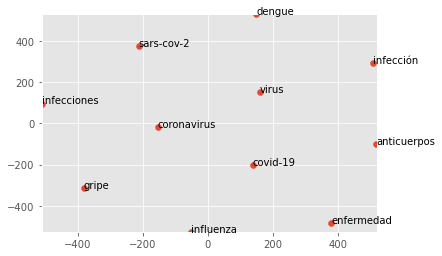

Word2Vec Mexican Spanish Model: Lyrics, News Documents

A Corpus That Contains Colloquial Lyrics & News Documents for Mexican Spanish

This experimental dataset was developed by four social science specialists and one industry expert (myself), using samples of Mexico-specific news texts and normalized song lyrics. The intent is to understand how small, phrase-level constituents interact with larger, editorialized style…
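Word2Vec learns word vectors from the contexts in which words appear. In its skip-gram variant, each word is paired with the words within a fixed window around it. The excerpt does not say which variant or window size this model uses, so the sketch below is an illustration only. It shows how (center, context) training pairs are produced from one tokenized sentence; it does not train embeddings. The function name `skipgram_pairs` and the window values are hypothetical choices. Libraries such as gensim's `Word2Vec` class generate these pairs internally during training.

```python
def skipgram_pairs(tokens: list[str], window: int = 2) -> list[tuple[str, str]]:
    """Return (center, context) pairs for every token within `window` positions.

    Hypothetical helper for illustration: it shows skip-gram pair generation,
    not the actual preprocessing used for the model described in the post.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    pairs = []
    for i, center in enumerate(tokens):
        # Clip the context window at the sentence boundaries.
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


if __name__ == "__main__":
    sentence = ["la", "radio", "toca", "corridos"]
    for center, context in skipgram_pairs(sentence, window=1):
        print(center, "->", context)
```

With a small window, a word is paired only with its immediate neighbors. A larger window pulls in broader, more topical context, which matters when a corpus mixes short lyric phrases with longer news sentences.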

-

Tokenizing Text, Finding Word Frequencies Within Corpora

One way to think about tokenization is as finding the smallest possible unit of analysis for computational linguistics tasks. As such, tokenization is one of the first steps (along with normalization) in a typical NLP pipeline or computational linguistics analysis. This process helps break down text into a manner…
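The sketch below shows normalization, tokenization and word-frequency counting together, using only Python's standard library. It assumes a simple regular-expression tokenizer. In Python 3, `\w+` matches Unicode letters, including accented Spanish characters such as á and ñ, as well as digits and underscores, so it is a rough baseline rather than a linguistically informed tokenizer. The helper names and the sample sentences are hypothetical and are not taken from the post's corpus.

```python
import re
import unicodedata
from collections import Counter

# In Python 3 str patterns, \w matches Unicode letters (including á, ñ, ü),
# digits and underscore; punctuation such as ¡ ! , . is skipped.
TOKEN_RE = re.compile(r"\w+")


def normalize(text: str) -> str:
    """Compose accents consistently (Unicode NFC) and lowercase the text."""
    return unicodedata.normalize("NFC", text).lower()


def tokenize(text: str) -> list[str]:
    """Normalize the text, then split it into word tokens."""
    return TOKEN_RE.findall(normalize(text))


def word_frequencies(documents: list[str]) -> Counter:
    """Count token frequencies across every document in a corpus."""
    counts = Counter()
    for doc in documents:
        counts.update(tokenize(doc))
    return counts


if __name__ == "__main__":
    corpus = [
        "¡Ya llegó el corrido! El corrido suena en la radio.",
        "La radio transmite las noticias de México.",
    ]
    print(word_frequencies(corpus).most_common(3))
```

Normalizing before tokenizing matters for Spanish text. For example, "México" can be stored as a precomposed é or as e plus a combining accent. Without NFC normalization, those two spellings would be counted as different words.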

